Dr. Alper Yegenoglu

Postdoc in Artificial Intelligence and Computational Neuroscience

SDL Neuroscience, Jülich Supercomputing Centre (JSC), Forschungszentrum Jülich, Jülich, Germany

Institute of Geometry and Applied Mathematics, Department of Mathematics, RWTH Aachen, Aachen, Germany

Biography

Alper Yeğenoğlu is a postdoc in artificial intelligence and computational neuroscience. He received his PhD (Dr. rer. nat.) in computer science in 2023. His research interests include bio-inspired learning, meta-learning, neuro-architecture search, agent-based modelling, and gradient-free optimization with population-based techniques (metaheuristics) such as evolutionary algorithms.

- Artificial Intelligence

- Computational Neuroscience

- Meta-learning, learning to learn

- Neuro-architecture search, AutoML

- Gradient-free Optimization

- Metaheuristics

- Agent-based modelling and swarm intelligence

PhD in computer science, 2023

RWTH Aachen

Skills

I make electronic music, especially synthwave. You can listen to my music at https://soundcloud.com/electric-courage and https://electriccourage.bandcamp.com/

Experience

Topics include:

- Gradient-free optimization methods applied to:

- Artificial and spiking neural networks (SNNs)

- Emergent self-organization and self-coordination in multi-agent systems steered by SNNs

- Meta-Learning and Multi-task learning with SNNs on high performance computing systems

Duties involved:

- Implementation of statistical analysis methods for electrophysiological and analog time series data

- Maintaining the Electrophysiology Analysis Toolkit (Elephant)

- Supporting other scientists implementing additional analysis methods

Featured Publications

We show how a multi-agent system (ant colony) steered by Spiking Neural Networks establishes self-organization while foraging for food. We evolve the networks using a genetic algorithm and learning to learn. Our results depict emergent behavior, which we investigate.

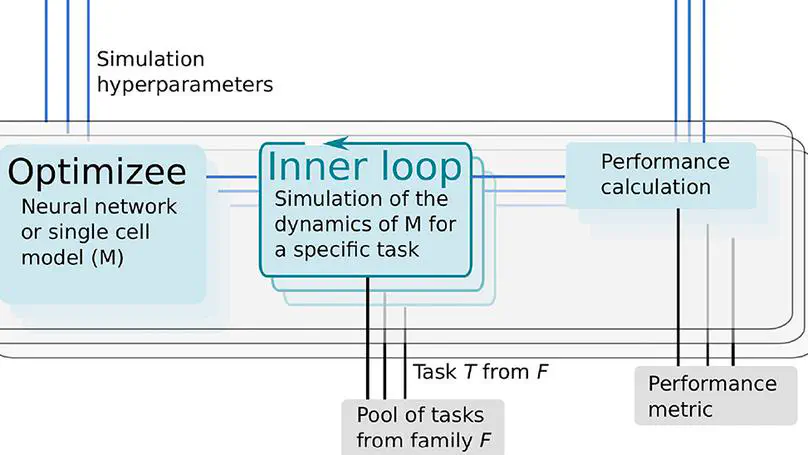

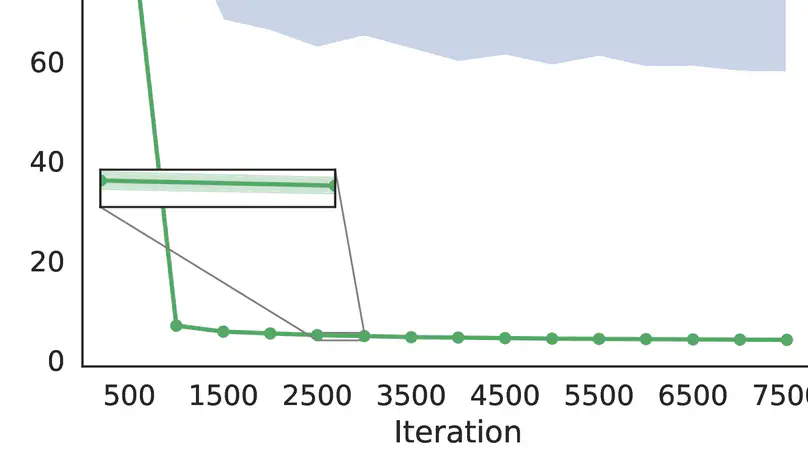

Understanding human intelligence, learning, decision-making, and memory has long been a pursuit in neuroscience. While our brain consists of networks of neurons, how these networks are trained for specific tasks remains unclear. In the field of machine learning and artificial intelligence, gradient descent and backpropagation are commonly used to optimize neural networks. However, applying these methods to biological spiking neural networks (SNNs) is challenging due to the binary communication scheme via spikes. In my research, I propose gradient-free optimization techniques for artificial and biological neural networks. I utilize metaheuristics like genetic algorithms and the ensemble Kalman Filter (EnKF) to optimize network parameters and train them for specific tasks. This optimization is integrated into the concept of learning to learn (L2L), which involves a two-loop optimization procedure. The inner loop trains the algorithm or network on a set of tasks, while the outer loop optimizes the hyperparameters and parameters. Initially, I apply the EnKF to a convolutional neural network, achieving high accuracy in digit classification. Then, I extend this optimization approach to a spiking reservoir network using the Python-based L2L-framework. By analyzing connection weights and the EnKF’s covariance matrix, I gain insights into the optimization process. I further enhance the optimization by integrating the EnKF into the inner loop and updating hyperparameters using a genetic algorithm. This automated approach suggests alternative configurations, eliminating the need for manual parameter tuning. Additionally, I present a simulation where SNNs guide an ant colony in food foraging, resulting in emergent self-coordination and self-organization. I employ correlation analysis methods to understand the ants’ behavior. Through my work, I demonstrate the effectiveness of gradient-free optimization techniques in learning to learn. I showcase their application in optimizing both biological and artificial neural networks.
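The two-loop structure described above can be illustrated with a toy sketch. It is not the L2L framework's API: `inner_loop`, `outer_loop`, the scalar "tasks", and the step-size hyperparameter are all hypothetical names and choices made for illustration. The inner loop fits one parameter per task with a gradient-free random search, and the outer loop evolves the step size with a simple genetic algorithm.

```python
import random


def inner_loop(step_size, target, rng, n_steps=30):
    """Inner loop (hypothetical): fit one scalar parameter to one task.

    Uses gradient-free random search and returns the fitness
    (negative squared error) reached with the given step size.
    """
    theta = 0.0
    best_err = (theta - target) ** 2
    for _ in range(n_steps):
        candidate = theta + rng.gauss(0.0, step_size)
        err = (candidate - target) ** 2
        if err < best_err:  # keep only improving moves
            theta, best_err = candidate, err
    return -best_err


def outer_loop(tasks, n_generations=15, pop_size=12, seed=0):
    """Outer loop (hypothetical): genetic algorithm over the step size."""
    rng = random.Random(seed)
    population = [rng.uniform(0.001, 5.0) for _ in range(pop_size)]
    history = []
    for _ in range(n_generations):
        # Fitness of a hyperparameter = mean inner-loop fitness over all tasks.
        scored = sorted(
            ((sum(inner_loop(s, t, rng) for t in tasks) / len(tasks), s)
             for s in population),
            reverse=True,
        )
        history.append(scored[0][0])
        # Selection: keep the better half. Mutation: rescale a random parent.
        parents = [s for _, s in scored[: pop_size // 2]]
        children = [
            rng.choice(parents) * rng.lognormvariate(0.0, 0.3)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    return scored[0][1], history


if __name__ == "__main__":
    best_step, history = outer_loop(tasks=[1.0, -2.0, 3.5])
    print("best step size:", best_step)
    print("best fitness per generation:", history)
```

Because the inner loop is stochastic, the best fitness per generation is not guaranteed to improve monotonically. Every inner-loop evaluation is independent of the others, so the evaluations could in principle run in parallel.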

Neuroscience models commonly have a high number of degrees of freedom, and only specific regions within the parameter space are able to produce dynamics of interest. This makes developing tools and strategies to find these regions efficiently highly important for advancing brain research. Exploring the high-dimensional parameter space using numerical simulations has been a frequently used technique in recent years in many areas of computational neuroscience. Today, high-performance computing (HPC) can provide a powerful infrastructure to speed up explorations and increase our general understanding of the behavior of the model in reasonable times. Learning to learn (L2L) is a well-known concept in machine learning (ML) and a specific method for acquiring constraints to improve learning performance. This concept can be decomposed into a two-loop optimization process where the target of optimization can consist of any program such as an artificial neural network, a spiking network, a single cell model, or a whole brain simulation. In this work, we present L2L as an easy-to-use and flexible framework to perform parameter and hyper-parameter space exploration of neuroscience models on HPC infrastructure. The framework is an implementation of the L2L concept written in Python. This open-source software allows several instances of an optimization target to be executed with different parameters in an embarrassingly parallel fashion on HPC. L2L provides a set of built-in optimizer algorithms, which make adaptive and efficient exploration of parameter spaces possible. Different from other optimization toolboxes, L2L provides maximum flexibility for the way the optimization target can be executed. In this paper, we show a variety of examples of neuroscience models being optimized within the L2L framework to execute different types of tasks. The tasks used to illustrate the concept range from reproducing empirical data to learning how to solve a problem in a dynamic environment. We particularly focus on simulations with models ranging from the single cell to the whole brain and using a variety of simulation engines like NEST, Arbor, TVB, OpenAI Gym, and NetLogo.

The successful training of deep neural networks depends on initialization schemes and the choice of activation functions. Non-optimally chosen parameter settings lead to the known problem of exploding or vanishing gradients. This issue occurs when gradient descent and backpropagation are applied. In this setting, the Ensemble Kalman Filter (EnKF) can be used as an alternative optimizer when training neural networks. The EnKF does not require the explicit calculation of gradients or adjoints, and we show that this resolves the exploding and vanishing gradient problem. We analyze different parameter initializations, propose a dynamic change in ensembles, and compare results to established methods.
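To make the gradient-free idea concrete, here is a minimal sketch of an EnKF-style parameter update on a toy problem. It is not the method from the publication: it fits a line `y = w * x + b` instead of a deep network, processes one sample at a time so the gain is a scalar division, and omits the dynamic ensemble change. The function name `enkf_fit_line` and the parameters `n_ensemble`, `n_epochs`, and `gamma` are assumptions made for illustration.

```python
import random


def enkf_fit_line(xs, ys, n_ensemble=50, n_epochs=20, gamma=0.01, seed=0):
    """Fit y = w * x + b with a deterministic EnKF-style update (no gradients)."""
    rng = random.Random(seed)
    # Each ensemble member is one candidate parameter vector [w, b].
    ensemble = [[rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)]
                for _ in range(n_ensemble)]

    for _ in range(n_epochs):
        for x, y in zip(xs, ys):
            # Forward pass of every member on the current sample.
            preds = [w * x + b for w, b in ensemble]
            mean_pred = sum(preds) / n_ensemble
            mean_params = [sum(m[k] for m in ensemble) / n_ensemble
                           for k in range(2)]

            # Sample cross-covariance (parameters vs. prediction) and
            # sample variance of the prediction.
            cov_up = [
                sum((m[k] - mean_params[k]) * (p - mean_pred)
                    for m, p in zip(ensemble, preds)) / n_ensemble
                for k in range(2)
            ]
            var_p = sum((p - mean_pred) ** 2 for p in preds) / n_ensemble

            # Kalman gain; gamma acts as the observation noise variance.
            gain = [c / (var_p + gamma) for c in cov_up]

            # Move every member toward the observed target.
            for m, p in zip(ensemble, preds):
                for k in range(2):
                    m[k] += gain[k] * (y - p)

    # The ensemble mean serves as the parameter estimate.
    return [sum(m[k] for m in ensemble) / n_ensemble for k in range(2)]


if __name__ == "__main__":
    xs = [i / 5.0 - 1.0 for i in range(11)]
    ys = [2.0 * x + 1.0 for x in xs]
    print(enkf_fit_line(xs, ys))
```

The update uses only forward evaluations of the model and ensemble statistics, so no derivative of the model is ever computed. For a multi-output network the scalar division would become a linear solve against the prediction covariance matrix.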